What Is AI Ethics for SMEs?

AI ethics is about using artificial intelligence responsibly. That means protecting privacy, reducing bias, being transparent about how decisions are made, and making sure someone is accountable.

For SMEs, this isn’t just about ticking compliance boxes. It’s about proving to clients, investors, and staff that your business uses AI in ways that are fair, secure, and trustworthy.

Why Does AI Ethics Matter for Small Businesses?

SMEs often move faster than larger organisations – but speed can’t come at the cost of trust. Ethical AI helps small businesses:

- Protect customer relationships and brand reputation.

- Avoid fines and penalties under GDPR or the EU AI Act.

- Prevent issues like shadow AI or bias creeping into decision-making.

- Reassure boards, clients, and investors that AI is being used responsibly.

What Are the Core Principles of Ethical AI for SMEs?

How Can SMEs Protect Data Privacy and Security?

Keep personal and business data encrypted, limit access to sensitive information, and always get consent before collecting or using customer details. Tools like mobile device management (MDM) and data loss prevention (DLP) help SMEs secure data across Apple, Microsoft, and Google environments.

How Do You Reduce Bias in AI Systems?

Bias can creep in if AI learns from incomplete or skewed data. Regularly test outcomes, use diverse training sets, and add human checks for high-impact areas like hiring or lending.
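As a rough illustration of what "regularly test outcomes" could look like, the sketch below compares selection rates between groups and flags the result for human review. The group labels, the sample data, and the 0.8 threshold (a common rule of thumb, not a legal test) are all assumptions made for the example.

```python
from collections import defaultdict

def selection_rates(records):
    """Return the share of positive outcomes per group.

    records: iterable of (group, selected) pairs, where selected is a bool.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        if selected:
            positives[group] += 1
    return {group: positives[group] / totals[group] for group in totals}

def disparate_impact_ratio(rates):
    """Lowest group selection rate divided by the highest."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected, so no group was favoured
    return min(rates.values()) / highest

# Hypothetical screening outcomes: (group label, shortlisted?)
outcomes = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 25 + [("B", False)] * 75
)

REVIEW_THRESHOLD = 0.8  # assumed rule of thumb, not a legal test

rates = selection_rates(outcomes)
ratio = disparate_impact_ratio(rates)
needs_human_review = ratio < REVIEW_THRESHOLD
print(rates, round(ratio, 3), needs_human_review)
```

A low ratio does not prove the tool is biased. It is a signal that a person should look at the data and the decisions before the tool is relied on further.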

Why Are Transparency and Explainability Important?

Staff, customers, and regulators should be able to understand how AI decisions are made. Avoid “black box” systems where you can’t explain an outcome, especially in finance, HR, or compliance.

Who Is Accountable for AI Decisions?

Always assign ownership. If an AI system makes a mistake, someone in the business should be responsible for reviewing it, fixing the issue, and preventing it from happening again.

How Do You Ensure Reliability and Safety?

AI models degrade over time as conditions change. This is known as model drift. Test regularly, monitor performance, and update models to keep results reliable.
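One simple way to monitor for drift, assuming you can collect the real outcome for a sample of recent predictions, is to compare recent accuracy against the accuracy measured when the model was approved. The function names, the baseline figure, and the 5-point tolerance below are illustrative assumptions, not a standard.

```python
def accuracy(pairs):
    """Fraction of (predicted, actual) pairs that match."""
    pairs = list(pairs)
    if not pairs:
        raise ValueError("need at least one labelled outcome")
    matches = sum(1 for predicted, actual in pairs if predicted == actual)
    return matches / len(pairs)

def drift_alert(baseline_accuracy, recent_pairs, max_drop=0.05):
    """Return (alert, recent_accuracy).

    alert is True when recent accuracy has fallen more than max_drop
    below the baseline recorded at approval time.
    """
    recent = accuracy(recent_pairs)
    return (baseline_accuracy - recent) > max_drop, recent

# Hypothetical figures: 0.92 accuracy at sign-off, then 84 correct
# predictions out of the last 100 labelled cases.
BASELINE_ACCURACY = 0.92
recent_pairs = [("approve", "approve")] * 84 + [("approve", "decline")] * 16

alert, recent_accuracy = drift_alert(BASELINE_ACCURACY, recent_pairs)
print(alert, recent_accuracy)
```

Accuracy is only one possible signal. Teams that cannot label recent outcomes quickly often watch for changes in the input data instead.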

Which AI Laws and Standards Apply to SMEs?

GDPR and Data Protection Obligations

If you process the personal data of people in the UK or EU, GDPR applies. It gives individuals rights in relation to automated decisions that significantly affect them, and requires clear explanations, human review options, and strong data security.

What Is the EU AI Act and How Does It Affect SMEs?

The EU AI Act classifies AI by risk. High-risk systems (e.g. hiring, credit scoring, healthcare) must meet strict requirements for transparency, security, and human oversight. SMEs using these tools must be ready to evidence compliance.

Do International Standards Apply to SMEs?

Yes. ISO/IEC 42001 (AI management systems) and ISO/IEC 23894 (AI risk guidance) provide frameworks that organisations of any size can apply. SMEs can adopt proportionate controls without heavy overhead.

What About the NIST AI Risk Management Framework?

NIST’s AI Risk Management Framework (AI RMF) helps identify and reduce AI risks. It’s voluntary and not a legal requirement in the UK or EU, but it’s useful if you work with US partners or need a flexible governance model.

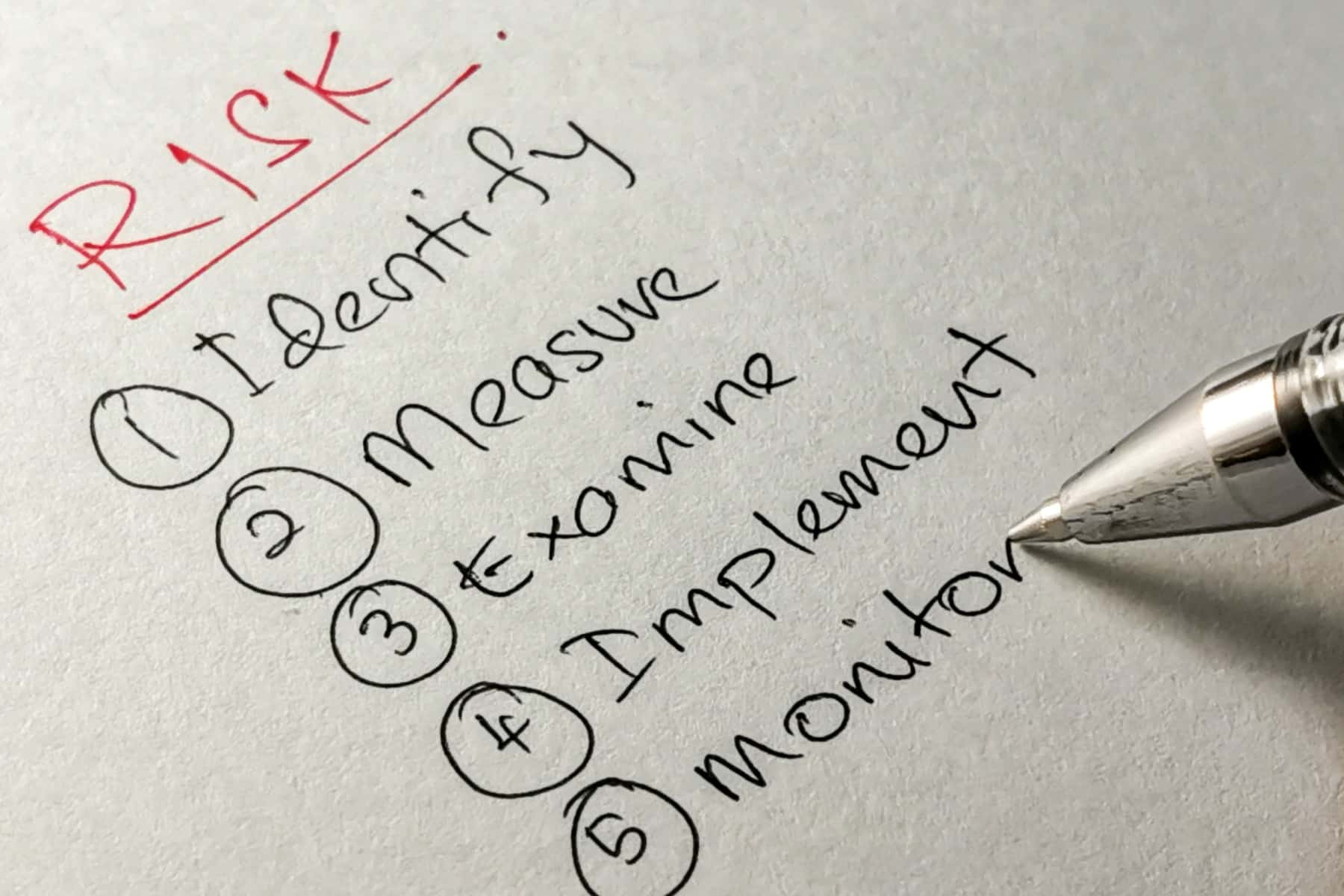

How Can SMEs Implement Ethical AI? (Step-by-Step Plan)

Step 1: Map AI Use Cases and Classify Risk

Audit which AI tools staff are using, including shadow AI. Map data flows to see where risks could arise.
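The audit can start as a simple inventory. The sketch below records each AI use case and assigns an internal triage label. The field names, the example tools, and the three-tier scheme are assumptions for illustration. They are not the legal risk categories of the EU AI Act.

```python
from dataclasses import dataclass

# Assumed, simplified list of high-impact areas, taken from the
# examples used in this article (hiring, credit scoring, healthcare).
HIGH_IMPACT_AREAS = {"hiring", "credit scoring", "healthcare"}

@dataclass
class AIUseCase:
    tool: str
    purpose: str
    handles_personal_data: bool
    approved: bool  # False means staff adopted it without review (shadow AI)

def classify(use_case):
    """Return an internal triage label: 'high', 'medium' or 'low'."""
    if use_case.purpose in HIGH_IMPACT_AREAS:
        return "high"
    if use_case.handles_personal_data or not use_case.approved:
        return "medium"
    return "low"

# Hypothetical inventory gathered from a staff survey.
inventory = [
    AIUseCase("CV screening plugin", "hiring", True, True),
    AIUseCase("Public chatbot (personal account)", "drafting emails", True, False),
    AIUseCase("Meeting summariser", "internal notes", False, True),
]

risk_register = {use_case.tool: classify(use_case) for use_case in inventory}
for tool, level in risk_register.items():
    print(f"{level:<6} {tool}")
```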

Step 2: Write a Lightweight AI Policy

Keep it short and clear. List approved tools, prohibited uses, data handling rules, and escalation steps.
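A short policy can also be written down as structured data, so that requests can be checked consistently. This is a minimal sketch: the tool names, data categories, and contact address are hypothetical placeholders, and a real policy would still need a human-readable version.

```python
# Hypothetical policy content; replace with your own approved tools,
# prohibited data categories and escalation contact.
POLICY = {
    "approved_tools": {"ApprovedAssistant", "InternalSearch"},
    "prohibited_data": {"customer_personal_data", "payment_details"},
    "escalation_contact": "ai-owner@example.com",
}

def check_request(tool, data_categories, policy=POLICY):
    """Return (allowed, reason) for a proposed use of an AI tool."""
    if tool not in policy["approved_tools"]:
        contact = policy["escalation_contact"]
        return False, f"{tool} is not approved; escalate to {contact}"
    blocked = sorted(set(data_categories) & policy["prohibited_data"])
    if blocked:
        return False, "prohibited data: " + ", ".join(blocked)
    return True, "ok"

print(check_request("ApprovedAssistant", ["marketing_copy"]))
print(check_request("ApprovedAssistant", ["payment_details"]))
print(check_request("UnknownTool", ["marketing_copy"]))
```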

Step 3: Secure Data Across Apple, Microsoft, and Google Stacks

Use MDM, identity management, and least-privilege access to reduce the risk of data leakage.

Step 4: Check Vendors and Contracts

Review contracts carefully. Look at data processing agreements (DPAs), model training policies, and liability terms.

Step 5: Train Staff and Prevent Shadow AI

Give employees safe, approved tools. Explain the risks of uploading customer data into public platforms like ChatGPT.

Step 6: Monitor and Respond

Track performance, bias, and errors. Run regular audits and update policies when regulations change.

Where Does Ethical AI Impact SME Operations?

HR and Recruitment

Hiring tools must be tested for bias and reviewed by humans before decisions are finalised.

Marketing and Content

Generative AI can save time, but businesses should review outputs for accuracy, tone, and compliance.

Customer Support and Chatbots

AI support tools should disclose they’re not human and escalate complex cases.
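The disclosure and escalation rules can be sketched in a few lines. The wording of the messages, the keyword list, and the two-attempt limit are assumptions for illustration. A real chatbot would connect this logic to its own platform.

```python
DISCLOSURE = "You are chatting with an automated assistant."
HANDOVER = "I'm passing this conversation to a member of our team."

# Hypothetical triggers for handing over to a person.
ESCALATION_KEYWORDS = ("complaint", "refund", "legal", "cancel")

def should_escalate(message, failed_attempts, max_failed=2):
    """Escalate on sensitive topics or after repeated failed answers."""
    text = message.lower()
    keyword_hit = any(keyword in text for keyword in ESCALATION_KEYWORDS)
    return keyword_hit or failed_attempts >= max_failed

def reply(message, failed_attempts, is_first_message):
    """Build a reply that discloses the bot and escalates when needed."""
    parts = []
    if is_first_message:
        parts.append(DISCLOSURE)  # always say up front that this is not a human
    if should_escalate(message, failed_attempts):
        parts.append(HANDOVER)
    else:
        parts.append("Thanks, let me look into that.")
    return " ".join(parts)

print(reply("I want a refund for my last invoice", 0, True))
```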

Finance and Risk Scoring

Credit and fraud systems need transparency. SMEs should be able to explain how decisions are made.

How Should SMEs Select Ethical AI Vendors and Tools?

Choosing the right AI vendors is just as important as how you use the tools. A clear due diligence checklist helps SMEs avoid hidden risks and ensures partners meet the same ethical standards you hold yourself to.

- Data handling: Ask where data is stored, how long it’s kept, and how it can be deleted.

- Model transparency: Choose vendors who can explain how their models work, not just the results.

- Security certifications: Look for ISO/IEC 27001 or SOC 2, and always review DPAs.

- Contracts: Negotiate liability and exit terms in case pricing changes or a vendor folds.

How Do You Monitor and Improve AI Ethics Over Time?

AI governance isn’t a one-off exercise. Continuous monitoring, audits, and policy updates help SMEs stay compliant, spot risks early, and keep AI systems reliable as regulations and business needs evolve.

- Risk indicators: Track bias rates, privacy incidents, and compliance breaches.

- Audits: Run yearly reviews; quarterly for high-risk systems.

- Policy updates: Revise policies when new regulations, such as provisions of the EU AI Act, come into force.
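The audit cadence above (yearly, or quarterly for high-risk systems) can be tracked with a small helper. The 91-day quarter, the system names, and the dates are assumptions chosen for the example.

```python
from datetime import date, timedelta

# Assumed intervals: roughly quarterly for high-risk, yearly otherwise.
AUDIT_INTERVAL_DAYS = {"high": 91, "standard": 365}

def next_audit(last_audit, risk):
    """Date by which the next audit should take place."""
    return last_audit + timedelta(days=AUDIT_INTERVAL_DAYS[risk])

def overdue(last_audit, risk, today):
    """True when the next audit date has already passed."""
    return today > next_audit(last_audit, risk)

# Hypothetical systems and their last audit dates.
systems = {
    "CV screening plugin": ("high", date(2025, 1, 15)),
    "Meeting summariser": ("standard", date(2025, 1, 15)),
}

today = date(2025, 6, 1)
for name, (risk, last_audit) in systems.items():
    due = next_audit(last_audit, risk)
    status = "OVERDUE" if overdue(last_audit, risk, today) else "ok"
    print(f"{name}: next audit due {due.isoformat()} ({status})")
```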

Quick Wins: A 30/60/90-Day AI Ethics Plan for SMEs

Getting started with AI ethics doesn’t have to be overwhelming. This simple 30/60/90-day roadmap gives SMEs a practical way to build momentum and show early progress without heavy overhead.

- 30 days: Map AI use, draft a lightweight policy.

- 60 days: Train staff, approve vendors, secure data.

- 90 days: Run your first audit and share results with leadership or clients.

Work With a Partner Who Understands Ethical AI

Rolling out AI in an SME doesn’t need to be complicated, but it does need to be responsible. At Dr Logic, we combine IT support, IT strategy, cyber security, and innovation expertise to help businesses use AI with confidence. From writing clear AI policies to securing data across Apple, Microsoft, and Google environments, we’ll make sure your AI adoption is ethical, compliant, and built to last.

Protect your business and unlock AI’s potential with Dr Logic.

FAQs: AI Ethics for SMEs

What is AI ethics, and why does it matter for SMEs?

AI ethics ensures AI is used fairly, securely, and transparently. For SMEs, it builds trust and reduces legal risk.

Which AI regulations apply to small businesses?

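GDPR and the EU AI Act may apply depending on your sector, your clients, and how you use AI. ISO/IEC standards and NIST’s AI RMF are voluntary frameworks, though clients or partners may expect you to follow them.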

How can SMEs prevent shadow AI?

Approve safe tools, block unreviewed apps, and train staff to use approved platforms securely.

Do SMEs need an AI ethics committee?

Usually no. A clear policy with assigned accountability is enough for most SMEs.

What should an SME AI policy include?

Approved tools, prohibited uses, accountability, data protection rules, and escalation steps.

How do SMEs reduce AI bias?

Test outputs, diversify training data, and review decisions with human oversight.